RL and Tabu Search for Graph Coloring Problems

Supervisors: Philippe Preux, Nathan Grinsztajn

Duration: 5 to 6 months

When: Spring-Summer 2021

Where: Scool (previously SequeL), Inria Lille, Villeneuve d’Ascq, France

Expected background: master in CS, specialized in machine learning.

Keywords: reinforcement learning, combinatorial optimization, experimental.

Context: Reinforcement learning is a sub-field of machine learning in which we aim at designing agents that learn to act. Acting usually involves performing a sequence of actions in order to achieve a goal. Examples are countless; games are good examples, like Pacman or chess in which the player has to perform a series of actions either to reach a maximal score, or to defeat his opponent. Applications of RL go way beyond games.

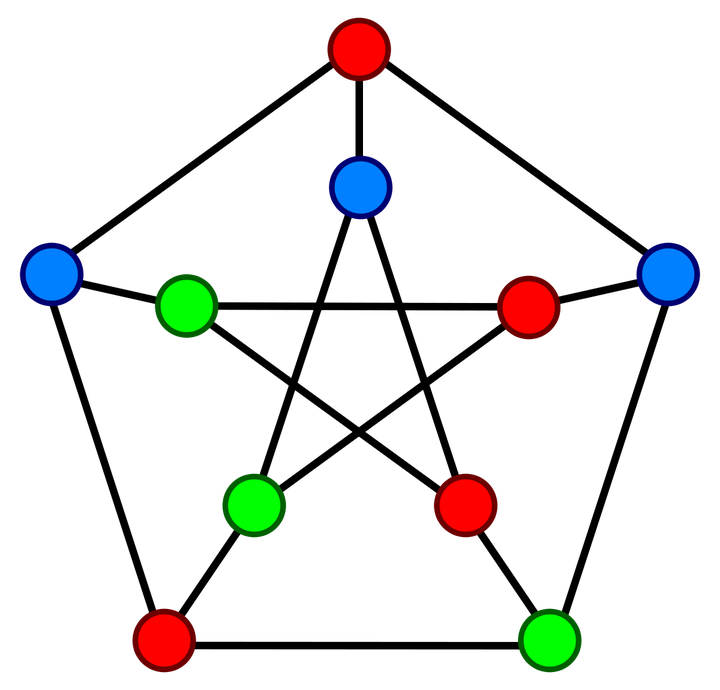

Recently, there were trials to use RL to solve hard combinatorial problems. Some major examples (and successes) are GO, Rubiks Cube, Scheduling, Maximum Independent Set (MIS), Maximum Coverage (MC), Minimum Vertex Cover (MVC)… Graph coloring, however useful in practice (bandwidth allocation, scheduling…) and difficult, received less attention. State of the art graph coloring solvers still mainly rely on tabu search (tabucol), and hand-crafted heuristics. It is to be expected that hybriding RL and tabu search will provide more efficient algorithms.

What: The goal of this internship is to:

- Study the literature of this problem. This includes deep reinforcement learning, graph neural networks, graph coloring, tabu search.

- Perform an experimental assessment of the ideas.

- Explore new ideas on combining RL and tabu search (or other search algorithms). This exploration can be theoretical or algorithmic.

Bibliography:

- Sutton, Barto, Reinforcement Learning, an Introduction, 2nd edition, 2018.

- Battaglia et al. Relational Inductive Biases, Deep Learning, and Graph Networks ArXiv:1806.01261, October 17, 2018.

- A. Hertz, D. de Werra, Using tabu search techniques for graph coloring. Computing 39, 345–351 (1987).

Working environment: Scool (previously SequeL) is a well-known research group in reinforcement learning and bandits. It is composed of 5 permanent researchers, 20+ PhD students, a couple of post-docs and engineers. Scool provides a very rich and stimulating for doing cutting-edge research in RL.